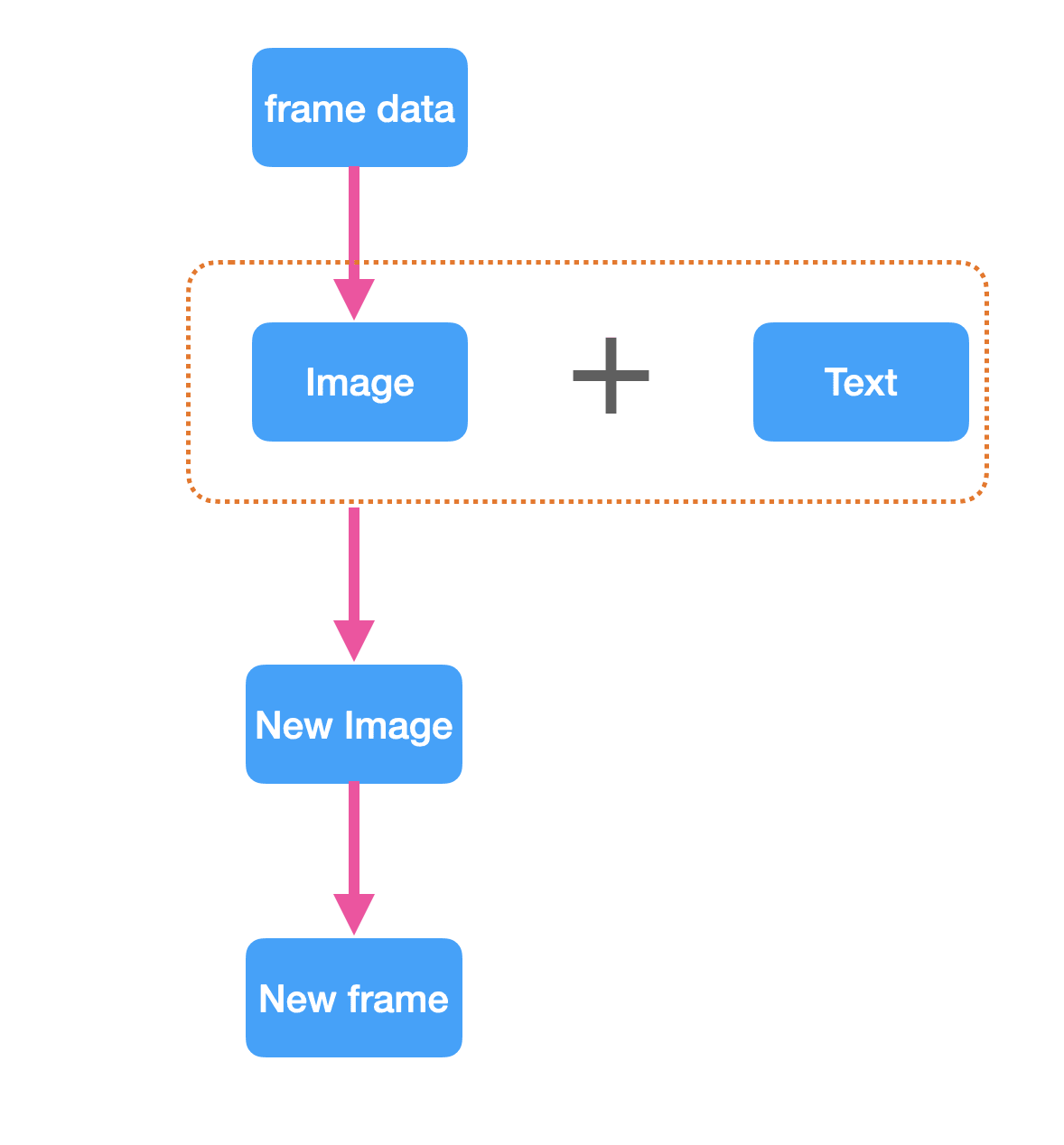

When we want to add a watermark to a video while recording it, there are two options:

A. Convert the video frame data to a Bitmap, draw the watermark on the Bitmap, and then convert the watermarked Bitmap back to frame data

This approach works. However, even when RenderScript intrinsics are used to speed up the conversion, converting every frame from frame data to a Bitmap and back again keeps the overall throughput low

B. Write the watermark's YUV data directly into the captured YUV frame data. The steps are as follows:

1. Draw the watermark content onto a Bitmap in advance and convert the Bitmap into a byte array in YUV format

2. Obtain the raw video frame data

3. Copy the valid part of the watermark YUV array into the corresponding position of the original frame data

Let's look at option B in detail:

1. Draw the watermark content onto a Bitmap and convert it into a byte array. The Bitmap contains white text on a black background. The black background makes it easy to tell, by color alone, which pixels belong to the watermark when it is merged into the YUV frame data

```java
private byte[] getOsdByte() {
    // ...
}
```

The conversion above uses NV12 as the example YUV format. Which format you actually need depends on the color formats supported by the encoder on the current phone; these are explained briefly below

2. The video frame data mainly comes from the Camera. Pay attention to its color format here

The color formats generally used by MediaCodec encoders on phones are:
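The body of `getOsdByte()` is not shown above. As a rough sketch of the Bitmap-to-NV12 step, the hypothetical helper below takes ARGB pixels in an `int[]` (the form `Bitmap.getPixels` returns for an `ARGB_8888` Bitmap) and produces an NV12 byte array. It assumes even width and height, no row padding, and BT.601 limited-range coefficients; the class and method names are illustrative, not the author's code.

```java
/**
 * Hypothetical helper: converts ARGB_8888 pixels (0xAARRGGBB, as returned by
 * Bitmap.getPixels) into a tightly packed NV12 byte array.
 * Assumes BT.601 limited-range coefficients and even width/height.
 */
final class ArgbToNv12 {

    private ArgbToNv12() {}

    // Clamp an int to the 0..255 range of an unsigned byte.
    private static int clamp(int v) {
        return v < 0 ? 0 : (v > 255 ? 255 : v);
    }

    static byte[] convert(int[] argb, int width, int height) {
        if ((width & 1) != 0 || (height & 1) != 0) {
            throw new IllegalArgumentException("width and height must be even");
        }
        int frameSize = width * height;
        byte[] nv12 = new byte[frameSize * 3 / 2];
        int uvIndex = frameSize; // interleaved UV plane starts right after Y
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int p = argb[y * width + x];
                int r = (p >> 16) & 0xFF;
                int g = (p >> 8) & 0xFF;
                int b = p & 0xFF;
                // BT.601 limited-range luma (black -> 16, white -> 235).
                int luma = ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16;
                nv12[y * width + x] = (byte) clamp(luma);
                // One U/V pair per 2x2 block, sampled from its top-left pixel.
                if ((y & 1) == 0 && (x & 1) == 0) {
                    int u = ((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128;
                    int v = ((112 * r - 94 * g - 18 * b + 128) >> 8) + 128;
                    nv12[uvIndex++] = (byte) clamp(u); // NV12 stores U first
                    nv12[uvIndex++] = (byte) clamp(v); // then V
                }
            }
        }
        return nv12;
    }
}
```

On Android the pixel array could be filled with `bitmap.getPixels(pixels, 0, width, 0, 0, width, height)` before calling `ArgbToNv12.convert(pixels, width, height)`. With these coefficients the black background becomes Y = 16 and the white text Y = 235, which is what makes the color test in step 3 simple.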

- COLOR_FormatYUV420Planar

- COLOR_FormatYUV420SemiPlanar

YUV420SemiPlanar covers two layouts: NV12 and NV21

YUV420Planar covers two layouts: I420 and YV12

Their byte layouts, shown for an 8-pixel frame (for example 4x2), are:

- I420: YYYYYYYY UU VV => Standard YUV420P

- YV12: YYYYYYYY VV UU => a variant of YUV420P (U and V planes swapped)

- NV12: YYYYYYYY UVUV => Standard YUV420SP

- NV21: YYYYYYYY VUVU => a variant of YUV420SP (U and V order swapped)
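To make the four layouts concrete, the sketch below (a hypothetical class `Yuv420Index`) computes where the Y, U and V samples for pixel (x, y) sit in a `width * height * 3 / 2` byte array. It assumes even dimensions and tightly packed rows with no stride padding.

```java
/**
 * Hypothetical helper: sample positions for pixel (x, y) in a tightly packed
 * YUV 4:2:0 frame of width * height * 3 / 2 bytes.
 */
final class Yuv420Index {

    enum Layout { I420, YV12, NV12, NV21 }

    private Yuv420Index() {}

    // Luma is a full-resolution plane at the start in all four layouts.
    static int yIndex(int x, int y, int width) {
        return y * width + x;
    }

    // Each U/V sample covers a 2x2 block of pixels.
    static int uIndex(Layout layout, int x, int y, int width, int height) {
        int frameSize = width * height;
        int cx = x / 2;
        int cy = y / 2;
        switch (layout) {
            case I420: // Y plane, then U plane, then V plane
                return frameSize + cy * (width / 2) + cx;
            case YV12: // Y plane, then V plane, then U plane
                return frameSize + frameSize / 4 + cy * (width / 2) + cx;
            case NV12: // Y plane, then interleaved UVUV
                return frameSize + cy * width + cx * 2;
            case NV21: // Y plane, then interleaved VUVU
                return frameSize + cy * width + cx * 2 + 1;
            default:
                throw new IllegalArgumentException("unknown layout: " + layout);
        }
    }

    static int vIndex(Layout layout, int x, int y, int width, int height) {
        int frameSize = width * height;
        int cx = x / 2;
        int cy = y / 2;
        switch (layout) {
            case I420:
                return frameSize + frameSize / 4 + cy * (width / 2) + cx;
            case YV12:
                return frameSize + cy * (width / 2) + cx;
            case NV12:
                return frameSize + cy * width + cx * 2 + 1;
            case NV21:
                return frameSize + cy * width + cx * 2;
            default:
                throw new IllegalArgumentException("unknown layout: " + layout);
        }
    }
}
```

The Y position is the same in every layout; only the placement of U and V differs, which is why a frame in the wrong layout still shows the right shapes but wrong colors.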

If the Camera preview is not configured otherwise, it returns frames in NV21 format by default. When the color format configured on the encoder differs from the Camera preview format, the data must be converted; otherwise the encoded video will show corrupted colors (garbled frames) or a black screen
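A minimal sketch of the two conversions that typically come up when the Camera delivers NV21: to NV12 for an encoder configured with `COLOR_FormatYUV420SemiPlanar`, and to I420 for `COLOR_FormatYUV420Planar`. The exact layout a device's encoder expects under each constant can vary, so treat that mapping as an assumption to verify on the target device. The class and method names are hypothetical, and the code assumes tightly packed rows (stride equal to width).

```java
/**
 * Hypothetical helpers for converting Camera NV21 frames into the layout the
 * encoder expects. Assumes even dimensions and no row padding.
 */
final class Nv21Convert {

    private Nv21Convert() {}

    // NV21 (Y + VUVU) -> NV12 (Y + UVUV): copy Y, swap each chroma pair.
    static byte[] nv21ToNv12(byte[] nv21, int width, int height) {
        int frameSize = width * height;
        byte[] out = new byte[frameSize * 3 / 2];
        System.arraycopy(nv21, 0, out, 0, frameSize);
        for (int i = frameSize; i < out.length; i += 2) {
            out[i] = nv21[i + 1]; // U
            out[i + 1] = nv21[i]; // V
        }
        return out;
    }

    // NV21 (Y + VUVU) -> I420 (Y + UU + VV): de-interleave chroma into planes.
    static byte[] nv21ToI420(byte[] nv21, int width, int height) {
        int frameSize = width * height;
        int quarter = frameSize / 4; // samples per chroma plane
        byte[] out = new byte[frameSize * 3 / 2];
        System.arraycopy(nv21, 0, out, 0, frameSize);
        for (int i = 0; i < quarter; i++) {
            out[frameSize + i] = nv21[frameSize + 2 * i + 1];       // U plane
            out[frameSize + quarter + i] = nv21[frameSize + 2 * i]; // V plane
        }
        return out;
    }
}
```

Both conversions leave the Y plane untouched, so their cost is proportional to the chroma data only (half the size of the Y plane).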

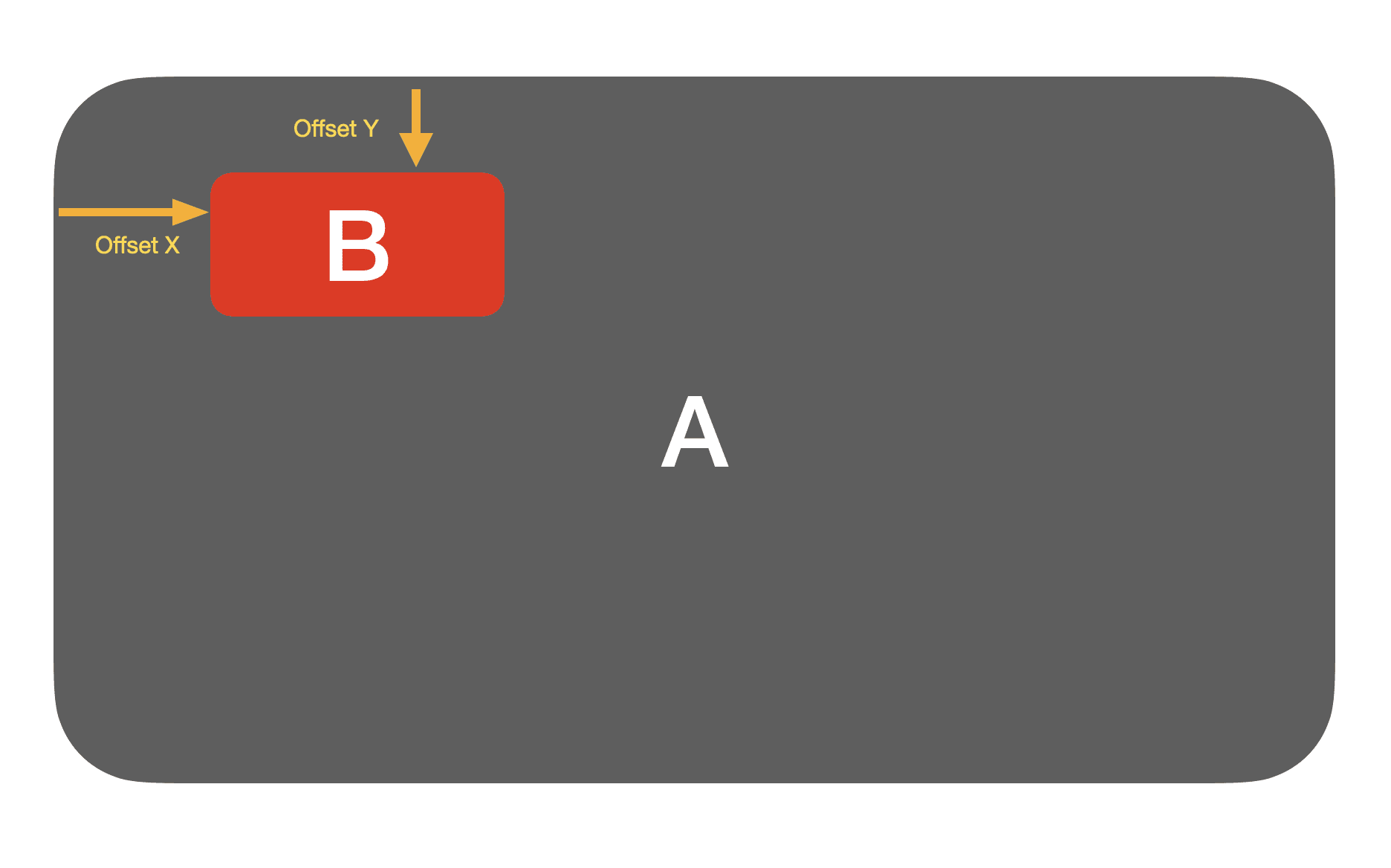

3. Copy the valid watermark part of the YUV array into the corresponding position of the original frame data

This method overwrites the original NV12 video frame data with the valid part of the NV12 watermark data (that is, the white text pixels of the white-on-black Bitmap described above): the corresponding values of array B are copied over array A

- offset_x is the horizontal offset of B relative to A

- offset_y is the vertical offset of B relative to A

```java
public static void mergeOsd(byte[] nv12_A, byte[] nv12_B, int offset_x, int offset_y, int a_width, int a_height, int b_width, int b_height) {
    // ...
}
```

After this, array A holds the frame data with the watermark applied and can be passed to the encoder
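The body of `mergeOsd` is not shown above either. One possible implementation, following the same parameter order, is sketched below under these assumptions: both arrays are tightly packed NV12, the offsets are even so that the 2x2 chroma blocks of A and B line up, and a watermark pixel counts as valid when its Y value is above a small threshold (`BLACK_THRESHOLD`, a hypothetical tuning value chosen because black maps to Y = 16). Parts of B that fall outside A are clipped. The class name `OsdMerger` is illustrative.

```java
/**
 * Hypothetical sketch of mergeOsd: overlays the non-black pixels of NV12
 * watermark B onto NV12 frame A, in place.
 */
final class OsdMerger {

    // Hypothetical threshold: B pixels with Y at or below this value are
    // treated as black background (BT.601 limited-range black is Y = 16).
    static final int BLACK_THRESHOLD = 32;

    private OsdMerger() {}

    static void mergeOsd(byte[] nv12A, byte[] nv12B, int offsetX, int offsetY,
                         int aWidth, int aHeight, int bWidth, int bHeight) {
        // Even offsets keep B's 2x2 chroma blocks aligned with A's.
        if ((offsetX & 1) != 0 || (offsetY & 1) != 0) {
            throw new IllegalArgumentException("offsets must be even");
        }
        int aFrameSize = aWidth * aHeight; // start of A's interleaved UV plane
        int bFrameSize = bWidth * bHeight; // start of B's interleaved UV plane
        for (int by = 0; by < bHeight; by++) {
            int ay = by + offsetY;
            if (ay < 0 || ay >= aHeight) {
                continue; // row falls outside A: clip
            }
            for (int bx = 0; bx < bWidth; bx++) {
                int ax = bx + offsetX;
                if (ax < 0 || ax >= aWidth) {
                    continue; // column falls outside A: clip
                }
                int bYIndex = by * bWidth + bx;
                if ((nv12B[bYIndex] & 0xFF) <= BLACK_THRESHOLD) {
                    continue; // background pixel: keep A unchanged
                }
                // Copy luma.
                nv12A[ay * aWidth + ax] = nv12B[bYIndex];
                // Copy the U/V pair of the 2x2 block containing this pixel.
                int aUv = aFrameSize + (ay / 2) * aWidth + (ax / 2) * 2;
                int bUv = bFrameSize + (by / 2) * bWidth + (bx / 2) * 2;
                nv12A[aUv] = nv12B[bUv];
                nv12A[aUv + 1] = nv12B[bUv + 1];
            }
        }
    }
}
```

Copying the U/V pair for every valid pixel may rewrite the same chroma bytes up to four times per 2x2 block, which keeps the loop simple; for white text the copied chroma is neutral (128), so the text stays white on any background.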