With PHP 8 alpha1 out, JIT is what everyone cares about most: how does it actually work, what should you watch out for, and how much does it improve performance in the end?

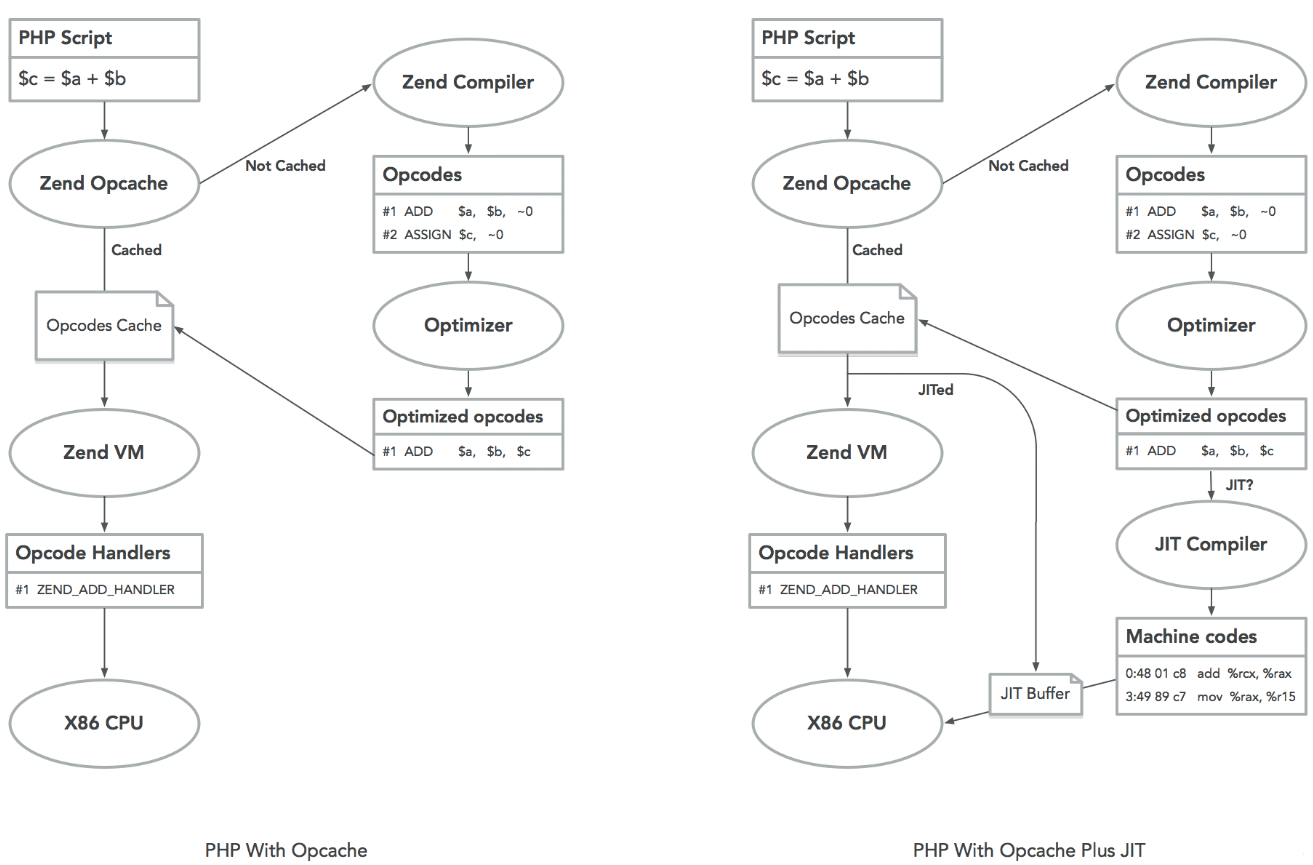

First, let's look at a graph:

The left side shows the Opcache pipeline before PHP 8, and the right side shows Opcache in PHP 8. A few key points stand out:

- Opcache performs opcode-level optimizations, such as merging the two opcodes in the diagram into one.
- In PHP 8, the JIT is currently provided as part of Opcache.
- The JIT builds on Opcache's optimizations, optimizes again using runtime information, and generates machine code directly.
- The JIT does not replace the existing Opcache optimizations; it enhances them.
- At present, the PHP 8 JIT only supports x86 CPUs.

After downloading and installing, enable JIT by adding the following to php.ini, in addition to your existing opcache settings:

```ini
opcache.jit=1205
opcache.jit_buffer_size=64M
```

The opcache.jit setting looks a bit complicated, so let me explain. It consists of four separate digits, read from left to right (note that this describes the current alpha1 version; some settings may be tweaked in later versions):

- First digit: whether to use AVX instructions when generating machine code (requires CPU support):
  - 0: do not use AVX
  - 1: use AVX
- Second digit: register allocation strategy:
  - 0: no register allocation
  - 1: local (basic block) register allocation
  - 2: global (function-wide) register allocation
- Third digit: JIT trigger strategy:
  - 0: JIT when the PHP script is loaded
  - 1: JIT a function when it is executed for the first time
  - 2: after the first run, JIT the most frequently called functions (the cutoff is opcache.prof_threshold * 100 percent)
  - 3: JIT a function/method once it has been executed more than N times (N depends on opcache.jit_hot_func)
  - 4: JIT a function/method when its doc comment contains `@jit`
  - 5: JIT a trace once it has been executed more than N times (N depends on opcache.jit_hot_loop, opcache.jit_hot_return, etc.)
- Fourth digit: JIT optimization strategy; the larger the value, the more optimization effort:
  - 0: no JIT
  - 1: JIT only the jumps between oplines
  - 2: inline opcode handler calls
  - 3: function-level JIT based on type inference
  - 4: function-level JIT based on type inference and the procedure call graph
  - 5: script-level JIT based on type inference and the procedure call graph
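Because each digit is positional, a value like 1205 can be decoded mechanically. The PHP sketch below splits a four-digit opcache.jit string and maps each digit to the meanings listed above. `decode_jit_setting` is a hypothetical helper, not part of PHP, and its labels follow the alpha1 description, so they may not match later releases.

```php
<?php
// Hypothetical helper: decode an alpha1-style opcache.jit value "CRTO".
// Digit meanings follow the list above and may change in later releases.
function decode_jit_setting(string $value): array
{
    if (!preg_match('/\A[0-9]{4}\z/', $value)) {
        throw new InvalidArgumentException("expected four digits, got '$value'");
    }
    // str_split gives ["1", "2", "0", "5"]; intval turns each into an int.
    [$cpu, $reg, $trigger, $opt] = array_map('intval', str_split($value));

    // Valid ranges: C 0-1, R 0-2, T 0-5, O 0-5.
    if ($cpu > 1 || $reg > 2 || $trigger > 5 || $opt > 5) {
        throw new InvalidArgumentException("digit out of range in '$value'");
    }

    $regalloc = ['none', 'local (block)', 'global (function)'];
    $triggers = [
        'when the script is loaded',
        'on first execution of a function',
        'most-called functions after one run',
        'after N calls (opcache.jit_hot_func)',
        'functions with @jit in their doc comment',
        'hot traces (opcache.jit_hot_loop, ...)',
    ];
    $levels = [
        'no JIT',
        'jumps between oplines only',
        'inline opcode handler calls',
        'function-level, type inference',
        'function-level, type inference + call graph',
        'script-level, type inference + call graph',
    ];

    return [
        'avx'      => $cpu === 1,
        'regalloc' => $regalloc[$reg],
        'trigger'  => $triggers[$trigger],
        'opt'      => $levels[$opt],
    ];
}

print_r(decode_jit_setting('1205'));
```

Decoding 1005 instead shows the only difference from 1205 is the register allocation digit, which is exactly the comparison made in the assembly listings later on.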

From this, we can roughly conclude:

- Use a 12x5 configuration where possible; it should currently be the most effective.
- For x, use 0 for scripts, and 3 or 5 for web services, depending on your own benchmark results.
- The `@jit` comment form may change to `<<jit>>` once attributes are available.
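The rule of thumb above can be written as a tiny helper that fills in the x of 12x5. This is only a sketch: `recommended_jit_setting` is a hypothetical name, it assumes the CPU supports AVX (the leading 1), and it does not decide between 3 and 5 for you.

```php
<?php
// Hypothetical helper encoding the rule of thumb above: "12x5", where
// 1 = use AVX (the CPU must support it), 2 = global register allocation,
// 5 = strongest optimization, and only the trigger digit x varies.
function recommended_jit_setting(bool $webService, int $webTrigger = 5): string
{
    if ($webService && $webTrigger !== 3 && $webTrigger !== 5) {
        throw new InvalidArgumentException('for web services, try trigger 3 or 5');
    }
    // Scripts: trigger 0 compiles everything when the script is loaded.
    $trigger = $webService ? $webTrigger : 0;
    return sprintf('12%d5', $trigger);
}

echo recommended_jit_setting(false), PHP_EOL;   // 1205
echo recommended_jit_setting(true, 3), PHP_EOL; // 1235
```

Choosing between 3 and 5 for a web service is the "depending on the test results" part, so benchmark both under your real workload.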

Now let's compare Zend/bench.php with and without JIT, starting without it (`php -d opcache.jit_buffer_size=0 Zend/bench.php`):

```
simple             0.008
simplecall         0.004
simpleucall        0.004
simpleudcall       0.004
mandel             0.035
mandel2            0.055
ackermann(7)       0.020
ary(50000)         0.004
ary2(50000)        0.003
ary3(2000)         0.048
fibo(30)           0.084
hash1(50000)       0.013
hash2(500)         0.010
heapsort(20000)    0.027
matrix(20)         0.026
nestedloop(12)     0.023
sieve(30)          0.013
strcat(200000)     0.006
------------------------
Total              0.387
```

Following the guidance above, we choose opcache.jit=1205, since bench.php is a script (`php -d opcache.jit_buffer_size=64M -d opcache.jit=1205 Zend/bench.php`):

```
simple             0.002
simplecall         0.001
simpleucall        0.001
simpleudcall       0.001
mandel             0.010
mandel2            0.011
ackermann(7)       0.010
ary(50000)         0.003
ary2(50000)        0.002
ary3(2000)         0.018
fibo(30)           0.031
hash1(50000)       0.011
hash2(500)         0.008
heapsort(20000)    0.014
matrix(20)         0.015
nestedloop(12)     0.011
sieve(30)          0.005
strcat(200000)     0.004
------------------------
Total              0.157
```

As you can see, for Zend/bench.php, turning on JIT cuts the total time by almost 60% (from 0.387 to 0.157), which means the benchmark runs roughly 2.5 times as fast.
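The percentages are easy to recompute from the two listings, and doing it per benchmark shows how uneven the gain is. The sketch below uses a hypothetical `jit_gain` helper and copies a few rows from the runs above; since bench.php only reports milliseconds, the ratios for the smallest entries are rough.

```php
<?php
// Compare run times before and after enabling JIT.
function jit_gain(float $before, float $after): array
{
    return [
        'saved_pct' => (1 - $after / $before) * 100, // share of time saved
        'speedup'   => $before / $after,             // how many times as fast
    ];
}

// Timings copied from the two Zend/bench.php runs above
// (without JIT => with opcache.jit=1205).
$runs = [
    'mandel2'        => [0.055, 0.011],
    'fibo(30)'       => [0.084, 0.031],
    'strcat(200000)' => [0.006, 0.004],
    'hash1(50000)'   => [0.013, 0.011],
    'Total'          => [0.387, 0.157],
];

foreach ($runs as $name => [$before, $after]) {
    $g = jit_gain($before, $after);
    printf("%-16s %5.1f%% less time, %.2fx as fast\n", $name, $g['saved_pct'], $g['speedup']);
}
```

For the totals this gives about 59.4% less time and about 2.46x as fast, while mandel2 comes out at 5x and hash1(50000) at only about 1.18x, so the overall figure averages over very different workloads.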

If you want to dig deeper, you can use opcache.jit_debug to inspect the assembly the JIT generates. For example, take the following function:

```php
<?php
function simple() {
    $a = 0;
    for ($i = 0; $i < 1000000; $i++)
        $a++;
}
```

Running it with `php -d opcache.jit=1205 -d opcache.jit_debug=0x01` gives:

```asm
: ; (/tmp/1.php)
    sub $0x10, %rsp
    xor %rdx, %rdx
    jmp .L2
.L1:
    add $0x1, %rdx
.L2:
    cmp $0x0, EG(vm_interrupt)
    jnz .L4
    cmp $0xf4240, %rdx
    jl .L1
    mov 0x10(%r14), %rcx
    test %rcx, %rcx
    jz .L3
    mov $0x1, 0x8(%rcx)
.L3:
    mov 0x30(%r14), %rax
    mov %rax, EG(current_execute_data)
    mov 0x28(%r14), %edi
    test $0x9e0000, %edi
    jnz JIT$$leave_function
    mov %r14, EG(vm_stack_top)
    mov 0x30(%r14), %r14
    cmp $0x0, EG(exception)
    mov (%r14), %r15
    jnz JIT$$leave_throw
    add $0x20, %r15
    add $0x10, %rsp
    jmp (%r15)
.L4:
    mov $0x45543818, %r15
    jmp JIT$$interrupt_handler
```

Try reading through this assembly, for example the increment of $i, and you can see how aggressive the optimization is. Because $i is a local variable, it is allocated directly in a register (%rdx); and because type inference determines that $i never exceeds 1000000 (0xf4240), there is no need to check for integer overflow, and so on.
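The overflow check matters because PHP integers do not wrap around: incrementing PHP_INT_MAX turns the value into a float, so an unoptimized `$i++` has to watch for that case. The sketch below shows the promotion; `post_increment` is a hypothetical helper, the `int|float` return type needs PHP 8 union types, and a 64-bit build is assumed.

```php
<?php
// Increment a value the same way the loop increments $i.
function post_increment(int $n): int|float
{
    $n++;        // at PHP_INT_MAX this promotes the int to a float
    return $n;
}

var_dump(post_increment(999999));                 // int(1000000)
var_dump(is_float(post_increment(PHP_INT_MAX)));  // bool(true)
```

In simple(), $i starts at 0 and the loop exits once it reaches 1000000, so it can never come near PHP_INT_MAX, which is why the JIT can drop the check entirely.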

If we instead use opcache.jit=1005, which, as described earlier, disables register allocation, we get:

```asm
: ; (/tmp/1.php)
    sub $0x10, %rsp
    mov $0x0, 0x50(%r14)
    mov $0x4, 0x58(%r14)
    jmp .L2
.L1:
    add $0x1, 0x50(%r14)
.L2:
    cmp $0x0, EG(vm_interrupt)
    jnz .L4
    cmp $0xf4240, 0x50(%r14)
    jl .L1
    mov 0x10(%r14), %rcx
    test %rcx, %rcx
    jz .L3
    mov $0x1, 0x8(%rcx)
.L3:
    mov 0x30(%r14), %rax
    mov %rax, EG(current_execute_data)
    mov 0x28(%r14), %edi
    test $0x9e0000, %edi
    jnz JIT$$leave_function
    mov %r14, EG(vm_stack_top)
    mov 0x30(%r14), %r14
    cmp $0x0, EG(exception)
    mov (%r14), %r15
    jnz JIT$$leave_throw
    add $0x20, %r15
    add $0x10, %rsp
    jmp (%r15)
.L4:
    mov $0x44cdb818, %r15
    jmp JIT$$interrupt_handler
```

Now $i is read and updated in memory (0x50(%r14)) instead of being kept in a register.

With opcache.jit=1201, the result is:

```asm
: ; (/tmp/1.php)
    sub $0x10, %rsp
    call ZEND_QM_ASSIGN_NOREF_SPEC_CONST_HANDLER
    add $0x40, %r15
    jmp .L2
.L1:
    call ZEND_PRE_INC_LONG_NO_OVERFLOW_SPEC_CV_RETVAL_UNUSED_HANDLER
    cmp $0x0, EG(exception)
    jnz JIT$$exception_handler
.L2:
    cmp $0x0, EG(vm_interrupt)
    jnz JIT$$interrupt_handler
    call ZEND_IS_SMALLER_LONG_SPEC_TMPVARCV_CONST_JMPNZ_HANDLER
    cmp $0x0, EG(exception)
    jnz JIT$$exception_handler
    cmp $0x452a0858, %r15d
    jnz .L1
    add $0x10, %rsp
    jmp ZEND_RETURN_SPEC_CONST_LABEL
```

Here the JIT does little more than chain calls to the existing opcode handlers; only the jumps between oplines are compiled into machine code.

You can also combine other opcache.jit strategies with the debug setting to compare the results, or try other opcache.jit_debug values, such as 0xff, which prints more auxiliary information.
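To compare several strategies side by side, it can help to generate the command lines rather than typing them. The sketch below builds one `php -d ...` command per opcache.jit value, mirroring the commands used above. `jit_debug_commands` is a hypothetical helper, and it assumes opcache with JIT is already loaded and enabled for the CLI through php.ini (including opcache.jit_buffer_size), as in the earlier runs.

```php
<?php
// Hypothetical helper: build one command per opcache.jit value so their
// jit_debug output can be compared. Assumes opcache (with JIT) is loaded
// and enabled for the CLI through php.ini, as in the runs above.
function jit_debug_commands(string $script, array $settings, string $debug = '0x01'): array
{
    $commands = [];
    foreach ($settings as $jit) {
        $commands[] = sprintf(
            '%s -d opcache.jit=%s -d opcache.jit_debug=%s %s',
            escapeshellarg(PHP_BINARY),
            escapeshellarg((string) $jit),
            escapeshellarg($debug),
            escapeshellarg($script)
        );
    }
    return $commands;
}

foreach (jit_debug_commands('/tmp/1.php', ['1205', '1005', '1201']) as $cmd) {
    echo $cmd, PHP_EOL;
    // passthru($cmd);  // uncomment to run each configuration in turn
}
```

Passing `'0xff'` as the third argument switches every command to the more verbose debug output mentioned above.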

That's a brief introduction to using the JIT. When I have time, I'll come back and write about how the JIT itself is implemented, along with other details.

You can go to php.net now and download PHP8 to test it :)

Thanks!